Recent developments across the artificial intelligence landscape show a growing focus on efficiency: delivering more capable AI while using fewer resources and lowering computational costs. This shift matters because as AI models grow in size and complexity, their energy consumption and operating expenses rise too, which can hinder widespread adoption and raise environmental concerns. The industry is responding with innovations in model architecture, training methods, and hardware acceleration, and the latest AI News Today coverage reflects this move toward more sustainable and accessible AI technologies.


Optimizing AI Models for Efficiency

The pursuit of efficiency in AI centers on several key areas, including model compression, algorithmic optimization, and hardware acceleration. Model compression techniques, such as pruning and quantization, reduce the size and complexity of AI models without significantly sacrificing accuracy. Algorithmic optimizations involve refining the underlying algorithms to perform computations more efficiently. Hardware acceleration leverages specialized hardware, like GPUs and TPUs, to speed up AI workloads. These efforts collectively contribute to AI systems that are faster, more energy-efficient, and more cost-effective to deploy.

Model Compression Techniques

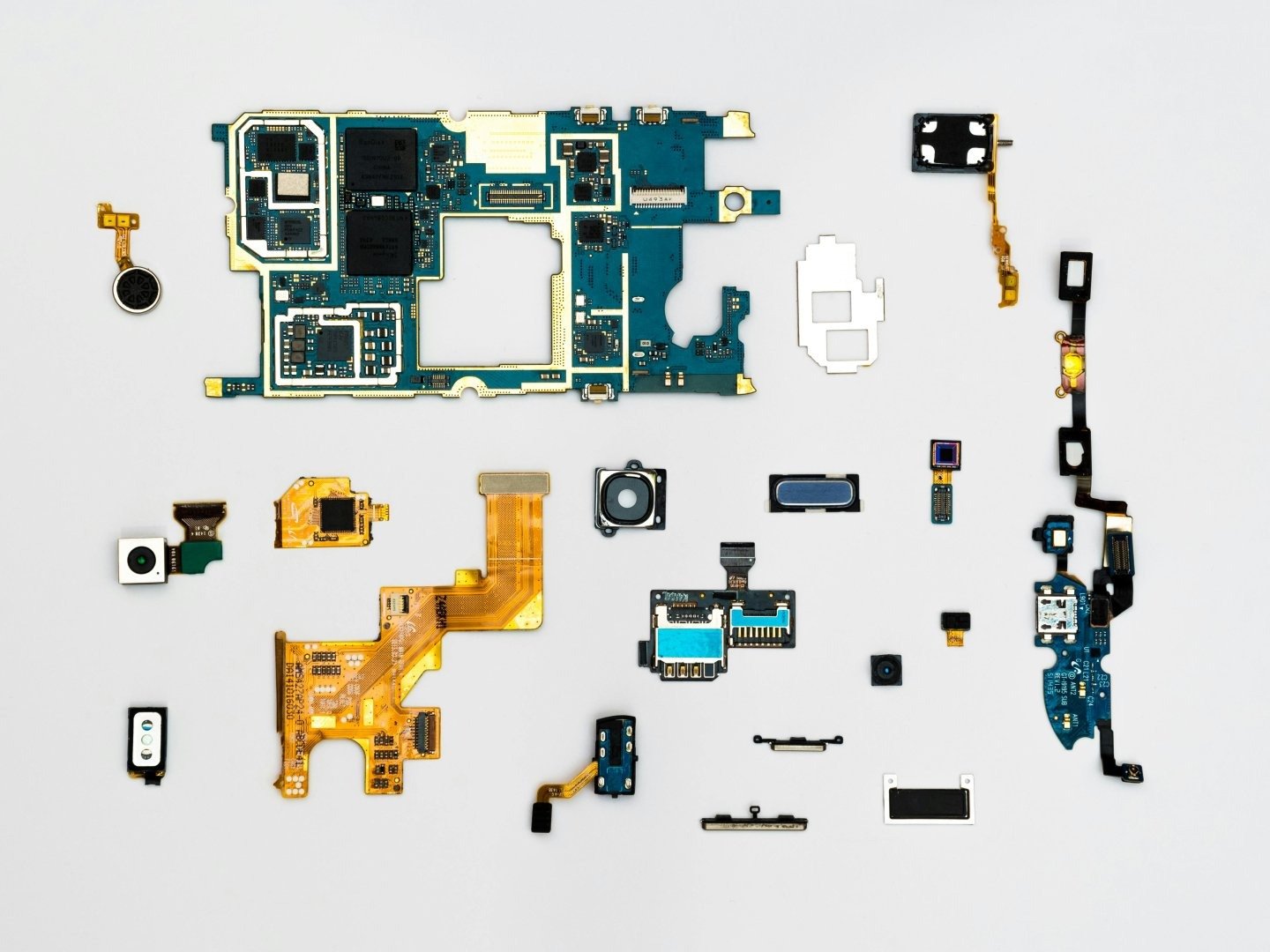

Model compression is a critical area of focus. Pruning removes redundant connections or parameters from a neural network, shrinking the model. Quantization lowers the numerical precision of the model’s parameters, representing each value with fewer bits (for example, 8-bit integers instead of 32-bit floating-point numbers) at the cost of a small approximation error. Together, these methods can substantially reduce the memory footprint and computational requirements of AI models, making them suitable for deployment on resource-constrained devices such as smartphones and embedded systems.
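To make the two techniques concrete, here is a minimal pure-Python sketch of unstructured magnitude pruning followed by symmetric per-tensor int8 quantization. It assumes a flat list of weights; the helper names (`magnitude_prune`, `quantize_int8`, `dequantize`) and the example values are hypothetical. Real frameworks operate on tensors and offer many variants (structured pruning, per-channel scales, calibration data).

```python
def magnitude_prune(weights, sparsity):
    """Zero out the fraction `sparsity` of weights with the smallest magnitude."""
    n_prune = int(len(weights) * sparsity)
    # Indices ordered by absolute value, smallest first.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in order[:n_prune]:
        pruned[i] = 0.0
    return pruned


def quantize_int8(weights):
    """Symmetric per-tensor quantization: map [-max|w|, max|w|] onto [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Each value now fits in one signed byte instead of a 4-byte float32.
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Recover approximate float values from the int8 codes."""
    return [v * scale for v in q]


weights = [0.8, -0.05, 0.3, -0.9, 0.01, 0.45]  # hypothetical layer weights
pruned = magnitude_prune(weights, sparsity=0.5)  # zeros the 3 smallest: -0.05, 0.3, 0.01
q, scale = quantize_int8(pruned)
restored = dequantize(q, scale)
print(pruned)
print(q, round(scale, 5))
print([round(r, 3) for r in restored])
```

With round-to-nearest, each restored weight differs from its pruned value by at most half of `scale`. A single large outlier inflates `scale` and coarsens every other weight, which is one reason libraries also offer per-channel scales.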

Algorithmic Optimization Strategies

Beyond model size, the efficiency of the algorithms themselves plays a crucial role. Researchers continue to develop algorithms that achieve the same or better results with fewer computations. For example, innovations in attention mechanisms and transformer architectures have led to more efficient natural language processing models. Knowledge distillation, meanwhile, trains a smaller student model to reproduce the outputs of a larger teacher model, transferring much of the teacher’s knowledge without replicating its computational burden.
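The distillation objective can be sketched in a few lines. This is a minimal pure-Python version of a commonly used formulation: the student matches the teacher’s temperature-softened probabilities (a KL-divergence term scaled by T²) while also fitting the true label (ordinary cross-entropy). The temperature `4.0`, the weighting `alpha=0.5`, and the example logits are illustrative assumptions, not values from any particular system.

```python
import math


def softmax(logits, temperature=1.0):
    """Convert logits to probabilities; a higher temperature gives a softer distribution."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def kl_divergence(p, q):
    """KL(p || q) for discrete distributions; terms with p_i = 0 contribute nothing."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def distillation_loss(student_logits, teacher_logits, label, temperature=4.0, alpha=0.5):
    """Blend a soft-target loss (match the teacher) with a hard-label loss."""
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    # Scaling by T^2 keeps soft-target gradients comparable in size as T changes.
    soft = kl_divergence(p_teacher, p_student) * temperature ** 2
    hard = -math.log(softmax(student_logits)[label])  # cross-entropy on the true label
    return alpha * soft + (1 - alpha) * hard


teacher = [6.0, 2.5, 1.0]  # hypothetical logits from a large model
student = [3.0, 2.0, 0.5]  # hypothetical logits from a small model
print(softmax(teacher, 1.0))
print(softmax(teacher, 4.0))  # softer: exposes how the teacher ranks the wrong classes
print(distillation_loss(student, teacher, label=0))
```

The softened teacher distribution carries information that a one-hot label lacks (which wrong answers are "almost right"), and that extra signal is what lets a much smaller student learn efficiently.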

Hardware Acceleration and Specialized AI Chips

Hardware acceleration is another vital component of the efficiency equation. General-purpose CPUs are not always the most efficient choice for AI workloads, which often involve massive parallel computations. GPUs, originally designed for graphics processing, have become popular for AI because of their parallel processing capabilities. Specialized AI chips, such as Google’s Tensor Processing Units (TPUs), are designed specifically for AI tasks and can offer even greater efficiency gains. These chips are optimized for the operations that dominate neural networks, such as large matrix multiplications, which shortens training and inference times.

The Rise of Edge AI

Edge AI, which involves running AI models on devices at the edge of the network rather than in the cloud, is another important trend driving efficiency. By processing data locally, Edge AI reduces the need to transmit large amounts of data to the cloud, saving bandwidth and reducing latency. This is particularly important for applications like autonomous vehicles, robotics, and IoT devices, where real-time decision-making is critical. Edge AI also enhances privacy by keeping sensitive data on the device.

Benefits of Edge Computing for AI

Edge computing offers several key benefits for AI applications:

- Reduced latency: Processing data locally eliminates the need to send data to the cloud, resulting in faster response times.

- Bandwidth savings: By processing data at the edge, less data needs to be transmitted over the network, reducing bandwidth costs.

- Enhanced privacy: Keeping data on the device reduces the risk of it being intercepted or exposed during transmission.

- Improved reliability: Edge AI can continue to function even when the network connection is unreliable or unavailable.
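A back-of-the-envelope calculation shows why the bandwidth savings can be large. The sketch below compares streaming every camera frame to the cloud with sending only a compact inference result from an edge device. All figures (30 frames per second, uncompressed 1280×720 RGB frames, a 200-byte result message) are hypothetical; real video is usually compressed, so the actual gap is smaller, but the structure of the calculation is the same.

```python
def bits_per_second(bytes_per_message, messages_per_second):
    """Sustained bandwidth needed to send one message per frame."""
    return bytes_per_message * 8 * messages_per_second


FPS = 30                          # hypothetical camera frame rate
RAW_FRAME_BYTES = 1280 * 720 * 3  # one uncompressed 8-bit RGB frame
RESULT_BYTES = 200                # hypothetical detection result (labels, boxes, scores)

cloud_bps = bits_per_second(RAW_FRAME_BYTES, FPS)  # ship every frame upstream
edge_bps = bits_per_second(RESULT_BYTES, FPS)      # run the model locally, ship results

print(f"cloud: {cloud_bps / 1e6:.1f} Mbit/s")  # cloud: 663.6 Mbit/s
print(f"edge:  {edge_bps / 1e3:.1f} kbit/s")   # edge:  48.0 kbit/s
print(f"reduction: {cloud_bps // edge_bps}x")  # reduction: 13824x
```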

Impact on AI Tools and Development

The focus on efficiency is also shaping the development of AI tools and frameworks. Frameworks like TensorFlow and PyTorch are regularly updated with new features and optimizations that improve performance and reduce resource consumption. Developers are also building new tools for profiling and optimizing AI models, which help them identify and address bottlenecks. These tools make it easier to build efficient AI applications from the ground up.
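Profilers answer a simple question: where does the time go? As a minimal stand-in for dedicated tools such as Python’s built-in `cProfile` or the profilers bundled with TensorFlow and PyTorch, the sketch below times each stage of a toy inference pipeline with `time.perf_counter` and reports the slowest one. The pipeline functions are hypothetical placeholders, and `infer` is deliberately quadratic so that it becomes the bottleneck.

```python
import time
from contextlib import contextmanager

timings = {}


@contextmanager
def stage(name):
    """Accumulate wall-clock time spent inside the `with` block under `name`."""
    start = time.perf_counter()
    try:
        yield
    finally:
        timings[name] = timings.get(name, 0.0) + time.perf_counter() - start


def preprocess(pixels):
    return [p / 255 for p in pixels]  # normalize to [0, 1]


def infer(features):
    # Toy "model" doing O(n^2) work, standing in for an expensive layer.
    return [sum(a * b for b in features) for a in features]


def postprocess(scores):
    return max(range(len(scores)), key=scores.__getitem__)  # argmax


data = list(range(1000))
with stage("preprocess"):
    x = preprocess(data)
with stage("infer"):
    y = infer(x)
with stage("postprocess"):
    prediction = postprocess(y)

# Report stages from slowest to fastest.
for name, seconds in sorted(timings.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{name:12s} {seconds * 1000:8.2f} ms")
bottleneck = max(timings, key=timings.get)
print("bottleneck:", bottleneck)
```

Manual stage timers like this are coarse; a real profiler attributes time to individual functions or operators without hand-written instrumentation, which is usually the better first step.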

How AI Tools Are Adapting

AI tools are adapting to the demand for efficiency in several ways:

- Optimized kernels: Frameworks like TensorFlow and PyTorch include optimized kernels for common AI operations, taking advantage of hardware acceleration.

- Automatic mixed precision: This technique runs operations in lower-precision data types (such as 16-bit floating point) where this is numerically safe and keeps precision-sensitive operations in 32-bit, reducing memory usage and speeding up computation.

- Quantization-aware training: This technique simulates quantization during training so the model learns to tolerate it, minimizing the accuracy loss when the model is later quantized.

- Profiling tools: These tools help developers identify performance bottlenecks and optimize their code.
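The trade-off behind mixed precision can be seen with Python’s standard `struct` module, whose `'e'` format packs IEEE 754 half-precision (16-bit) floats. This pure-Python sketch only illustrates the numerics: half precision halves storage but loses precision, and very small values such as tiny gradients underflow to zero. That is why mixed-precision training typically multiplies the loss by a scale factor before the backward pass and divides it out afterwards; PyTorch’s `torch.autocast` and its gradient scaler automate this on real tensors. The scale factor of 1024 and the gradient value are hypothetical.

```python
import struct


def to_fp16(x):
    """Round a Python float to the nearest IEEE 754 half-precision value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


# Storage cost per value: half precision uses 2 bytes, single precision 4.
fp16_bytes = struct.calcsize("<e")
fp32_bytes = struct.calcsize("<f")
print(f"fp16: {fp16_bytes} bytes, fp32: {fp32_bytes} bytes")

# Precision loss: 0.1 is stored only approximately in 16 bits.
print(to_fp16(0.1))

# Underflow: a tiny gradient rounds to zero in fp16 ...
grad = 1e-8  # hypothetical gradient magnitude
print(to_fp16(grad))

# ... but survives if scaled up first, then unscaled in higher precision.
LOSS_SCALE = 1024.0  # hypothetical scale factor (a power of two)
scaled = to_fp16(grad * LOSS_SCALE)
recovered = scaled / LOSS_SCALE  # unscaled in float64 here (fp32 in real training)
print(scaled, recovered)
```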

The Environmental Impact of AI Efficiency

The drive for AI efficiency is not just about cost savings and performance gains; it’s also about reducing the environmental impact of AI. Training large AI models can consume a significant amount of energy, contributing to carbon emissions. By making AI models more efficient, researchers and developers can reduce the energy footprint of AI and promote more sustainable AI practices. Organizations like Google are actively working on making their AI infrastructure more energy-efficient.
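The energy cost of a training run can be estimated with simple arithmetic: accelerator count × average power draw × hours, multiplied by the data center’s power usage effectiveness (PUE) to account for cooling and other overhead, then by the grid’s carbon intensity to estimate emissions. Every number in this sketch is a hypothetical placeholder rather than a measurement of any real model; the point is that each efficiency gain (fewer accelerators, shorter runs, lower power draw) scales the total proportionally.

```python
def training_energy_kwh(num_accelerators, avg_power_watts, hours, pue):
    """Facility energy for a training run, including data-center overhead via PUE."""
    return num_accelerators * avg_power_watts * hours * pue / 1000


def emissions_kg_co2e(energy_kwh, carbon_intensity_kg_per_kwh):
    """Operational emissions given the grid's carbon intensity."""
    return energy_kwh * carbon_intensity_kg_per_kwh


# Hypothetical run: 8 accelerators at 400 W for 100 hours, PUE 1.2.
baseline = training_energy_kwh(8, 400, 100, 1.2)
# Same job after a hypothetical optimization that halves training time.
optimized = training_energy_kwh(8, 400, 50, 1.2)

GRID_INTENSITY = 0.4  # hypothetical kg CO2e per kWh
for label, kwh in [("baseline", baseline), ("optimized", optimized)]:
    print(f"{label:9s} {kwh:7.1f} kWh  {emissions_kg_co2e(kwh, GRID_INTENSITY):6.1f} kg CO2e")
```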

Future Trends in AI Efficiency

Looking ahead, several trends are likely to further drive efficiency in AI. Neuromorphic computing, which mimics the structure and function of the human brain, holds the promise of ultra-low-power AI. New materials and manufacturing techniques could lead to even more efficient AI chips. And ongoing research into new algorithms and model architectures will continue to push the boundaries of what’s possible.

Key Areas to Watch

- Neuromorphic computing: This emerging field aims to create AI systems that approach the energy efficiency of the human brain.

- New materials and manufacturing techniques: Advances in materials science could lead to AI chips that are faster and more energy-efficient.

- Automated machine learning (AutoML): AutoML tools can automate the process of model selection and hyperparameter tuning, helping developers find the most efficient model for their task.

- TinyML: This field focuses on developing AI models that are small enough to run on extremely low-power devices, enabling a wide range of new applications.
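A quick way to judge whether a model is a TinyML candidate is to compare its weight storage against a microcontroller’s memory budget. The sketch assumes a hypothetical 250,000-parameter model and a hypothetical 256 KiB flash budget, and it counts weights only; activations, the inference runtime, and application code need memory too, so a real check is stricter.

```python
KIB = 1024


def weight_bytes(num_params, bits_per_param):
    """Bytes needed to store the weights, rounded up to whole bytes."""
    return (num_params * bits_per_param + 7) // 8


def fits(num_params, bits_per_param, budget_bytes):
    """True if the weights alone fit within the given byte budget."""
    return weight_bytes(num_params, bits_per_param) <= budget_bytes


NUM_PARAMS = 250_000      # hypothetical small model
FLASH_BUDGET = 256 * KIB  # hypothetical flash available for weights

for bits in (32, 16, 8):
    size = weight_bytes(NUM_PARAMS, bits)
    verdict = "fits" if fits(NUM_PARAMS, bits, FLASH_BUDGET) else "too large"
    print(f"{bits:2d}-bit weights: {size / KIB:7.1f} KiB -> {verdict}")
```

In this example only 8-bit weights come in under budget, which illustrates why TinyML work leans heavily on the compression techniques described earlier.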

The Broader Implications of Efficient AI

The focus on efficiency has broad implications for the AI ecosystem. It makes AI more accessible to a wider range of organizations and individuals, reducing the barriers to entry. It enables new applications of AI in resource-constrained environments. And it promotes more sustainable AI practices, reducing the environmental impact of this transformative technology. As AI becomes more pervasive, efficiency will be a key factor in ensuring its long-term viability and positive impact on society.

In conclusion, the latest developments covered in AI News Today highlight a significant industry-wide push toward greater efficiency in artificial intelligence. This emphasis on optimization, driven by factors ranging from cost reduction to environmental concerns, is reshaping how AI models are designed, trained, and deployed. Looking forward, it will be important to watch advances in areas like neuromorphic computing and TinyML, which promise even greater efficiency and could extend AI to new frontiers. Better AI tools, along with practical resources such as curated lists of AI prompts, will also support continued AI innovation.